The potential dark side of AI and GPT-3 writing tools

I have a backlog of topics I've been meaning to write you about.

But today I did an interview with a journalist from The Information, and it resulted in a cascade of thoughts about AI writing tools, AI content in general, and specifically OpenAI's GPT-3 language model.

Buckle up.

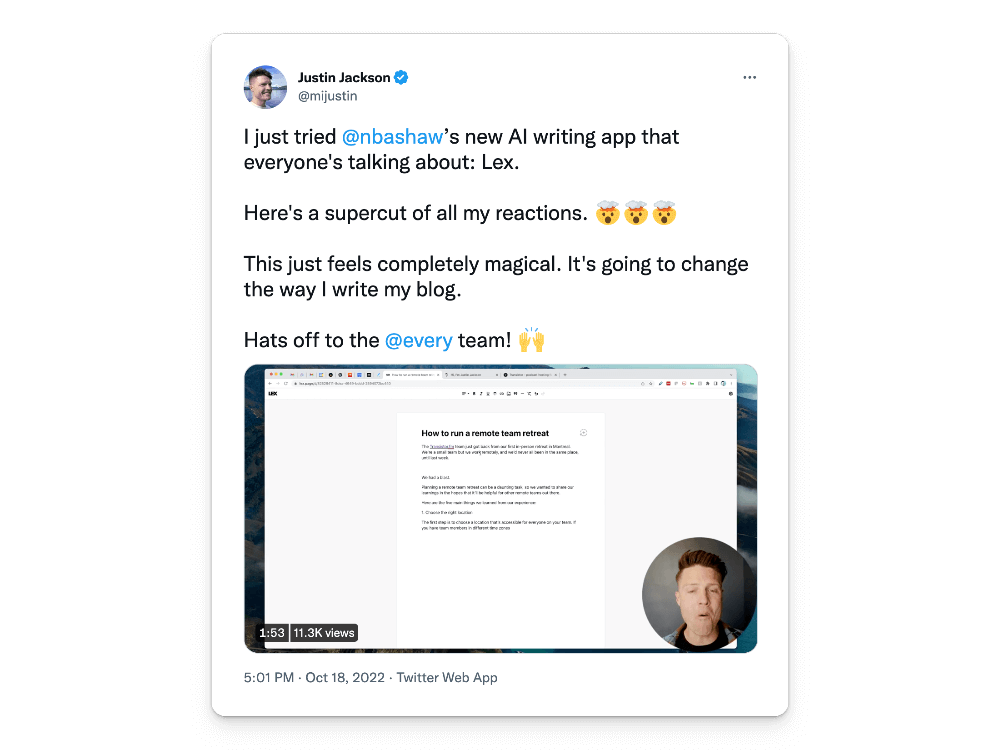

First, some background. A few days ago I tried out Lex, Nathan Baschez's new AI writing assistant.

I recorded my initial reactions, and (as you'll see) I was pretty enamored with it:

As a small business owner, I was excited about the possibilities. A tool like Lex could help me write content for Transistor.fm more efficiently. An article that would normally take me two hours to write (including procrastination) took 20 to 40 minutes with Lex.

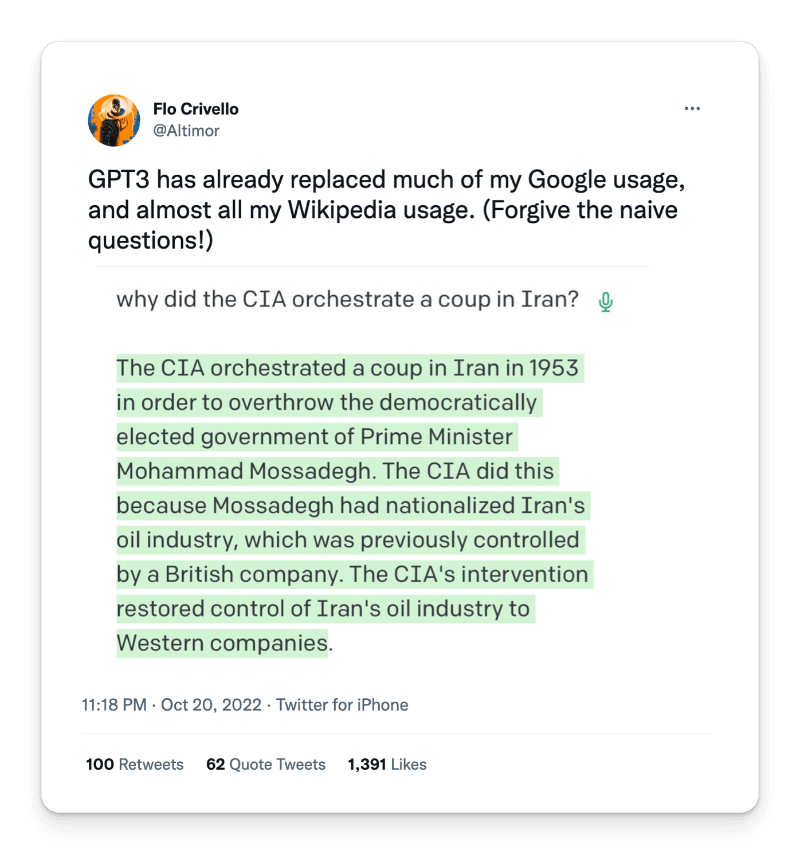

I didn't really consider the deeper implications of this technology until I saw this tweet:

"GPT3 has already replaced much of my Google usage, and almost all my Wikipedia usage."

What happens when people start going to AI for answers instead of Google search and Wikipedia?

How does the AI decide what to suggest?

What are the knock-on effects of these suggestions?

I recorded this quick video to explore some of these questions:

Aside from the bigger issues with propaganda, there are also potential problems for small businesses.

For example, when I asked the AI to suggest content for "best podcast hosting," it suggested three of my competitors!

For many founders and indie makers, the point of these AI-assisted writing tools is SEO: to rank on Google.

But if people start skipping Google and going directly to the AI... will we even need to write all this content?

And... will brands start figuring out how to optimize for AI suggestions?

Instead of hiring "SEO consultants" to help us rank on Google, will we hire "language-model consultants" to help us rank on language models like GPT-3?

Cheers,

Justin Jackson

@mijustin